Hey, cyber enthusiasts! How are you doing, guys?

TryHackMe has released the task for day 7 of the Advent of Cyber 2023, so here I am with its walkthrough. In this walkthrough, you will learn several Linux commands with examples, get an introduction to log analysis, and solve the day’s challenges. Let’s start by understanding what logs and log analysis are.

Log

A log file is like a digital trail of what’s happening behind the scenes in a computer or software application. It records important events, actions, errors, or information as they happen. It helps diagnose problems, monitor performance, and record what a program or application is doing. Let’s see an example of logs.

127.0.0.1 - - [01/Aug/2023:17:50:31 -0700] "GET /index.html HTTP/1.1" 200 336 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"

127.0.0.1 - - [01/Aug/2023:17:50:32 -0700] "GET /styles.css HTTP/1.1" 200 428 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"

127.0.0.1 - - [01/Aug/2023:17:50:32 -0700] "GET /scripts.js HTTP/1.1" 200 1235 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"

10.0.0.1 - - [01/Aug/2023:17:50:33 -0700] "GET /contact.html HTTP/1.1" 404 173 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"

Each line in the log file represents a single request made to the web server. Let’s break down the first log entry and understand its contents.

127.0.0.1 - - [01/Aug/2023:17:50:31 -0700] "GET /index.html HTTP/1.1" 200 336 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"

This is just a simple example, and the format and content of logs can vary depending on the software and operating system. However, the basic principles of log analysis are the same regardless of the specific format.

| Field | Value | Description |
| --- | --- | --- |
| IP address | 127.0.0.1 | The IP address of the client that made the request. |
| Date and time | [01/Aug/2023:17:50:31 -0700] | The date and time the request was made; -0700 is the time-zone offset from UTC. |
| Request method | GET | The type of request made, such as GET or POST. |
| Request URL | /index.html | The URL of the resource that was requested. It sits inside the request line "GET /index.html HTTP/1.1", which also names the method and protocol version. |
| HTTP status code | 200 | The HTTP status code returned by the server. |
| Size of the response | 336 | The size of the response in bytes. |
| Referrer | "-" | The URL of the page that linked to the requested resource; "-" means no referrer was sent. |
| User agent | "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36" | The user agent of the client that made the request. |
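To check the field breakdown above by hand, here is a minimal shell sketch (an illustration, not part of the TryHackMe task) that splits the first example entry with awk. It assumes the layout shown above, where the request line, referrer, and user agent are wrapped in double quotes; the variable names `entry` and `fields` are just for illustration.

```shell
#!/usr/bin/env bash
# The first example entry from above.
entry='127.0.0.1 - - [01/Aug/2023:17:50:31 -0700] "GET /index.html HTTP/1.1" 200 336 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36"'

# Split on double quotes first, then split the unquoted pieces on whitespace:
#   $1 -> IP, identity, user, [timestamp zone]
#   $2 -> request line (method, URL, protocol)
#   $3 -> status code and response size
#   $4 -> referrer, $6 -> user agent
fields=$(printf '%s\n' "$entry" | awk -F'"' '{
  split($1, pre, " "); split($2, req, " "); split($3, st, " ")
  print "IP address:       " pre[1]
  print "Date and time:    " pre[4] " " pre[5]
  print "Request method:   " req[1]
  print "Request URL:      " req[2]
  print "HTTP status code: " st[1]
  print "Response size:    " st[2]
  print "Referrer:         " $4
  print "User agent:       " $6
}')
printf '%s\n' "$fields"
```

Splitting on the quote character first keeps the spaces inside the user agent from being treated as field separators.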

I hope you now have a basic understanding of logs and can identify the contents of any log file you encounter. Here’s a small exercise: identify the elements of the following log entry.

158.32.51.188 - - [25/Oct/2023:09:11:14 +0000] "GET /robots.txt HTTP/1.1" 200 11173 "-" "curl/7.68.0"

Log Analysis

Log analysis is the process of reviewing, interpreting, and understanding computer-generated records called logs. These logs provide valuable insights into the performance, health, and security of your systems. Several tools and resources are available for log analysis, such as:

| Category | Examples |
| --- | --- |
| Log Management Systems (LMS) | Splunk, ELK Stack, Sumo Logic |
| SIEM (Security Information and Event Management) | ArcSight, IBM QRadar, RSA Security Analytics |
| Cloud-based Log Analysis Services | AWS CloudTrail, Azure Monitor, Google Cloud Logging |
| Open-source Log Analysis Tools | Logstash, Fluentd, Graylog |
| Visualization Tools | Kibana, Grafana, Tableau |

You don’t need to explore these tools for now. Let’s see what a proxy server is.

Proxy Server

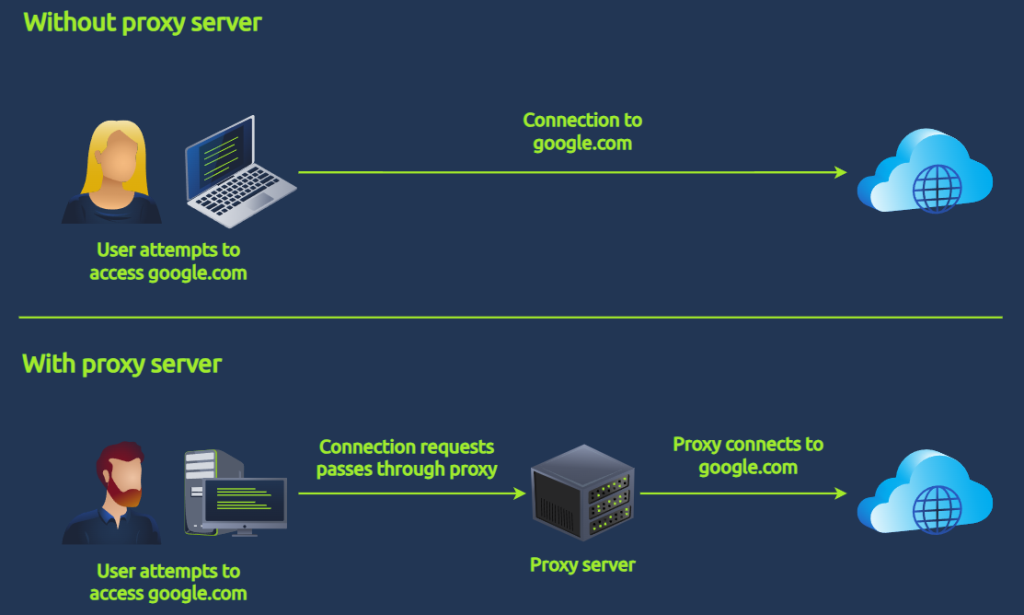

A proxy server is an intermediary between your computer or device and the internet. When you access a web page, your device connects to the proxy server instead of connecting directly to the target web server. The proxy server then forwards your request to the internet, receives the response, and sends it back to your device.

Without a proxy server, you connect to the web server directly, while with a proxy server, you first connect to the proxy server, then it forwards your request to the web server, and when the web server sends a response, the proxy server accepts it first and then sends the response to you.

A proxy server offers enhanced visibility into network traffic and user activities because it can log every web request and response that passes through it. This enables system administrators and security analysts to monitor which websites users access, when, and how much bandwidth is used. It also allows administrators to enforce policies and block specific websites or content categories.

Now let’s explore basic Linux commands.

Learning Linux commands takes time and practice. Start with the basics, experiment with different commands and options, and work through the examples below.

- ping: Check if a host is reachable and measure the round-trip time.

kali@kali:~$ ping google.com

PING google.com (142.250.184.58) 56(84) bytes of data.

64 bytes from 142.250.184.58: icmp_seq=1 ttl=56 time=0.615 ms

64 bytes from 142.250.184.58: icmp_seq=2 ttl=56 time=0.598 ms

…

- netstat: Show active network connections.

kali@kali:~$ netstat -a

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State

tcp 0 0 127.0.0.1:22 0.0.0.0:* LISTEN

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN

…

- traceroute: Trace the path taken by packets to a specific host.

kali@kali:~$ traceroute google.com

traceroute to google.com (142.250.184.58), 30 hops max, 60 byte packets

1 192.168.1.1 (192.168.1.1) 0.415 ms 0.533 ms 0.433 ms

2 8.8.8.8 (8.8.8.8) 1.36 ms 1.21 ms 1.14 ms

…

- ip addr: Show information about network interfaces.

kali@kali:~$ ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

…

- wget: Download a file from a web server.

kali@kali:~$ wget https://example.com/example.txt

- cat: Display the contents of a file.

kali@kali:~$ cat example.txt

This is an example text file.

- grep: Search a file for a specific pattern.

kali@kali:~$ grep "

- less: View a long file one page at a time

kali@kali:~/Downloads$ less example.txt

This is an example text file.

- head: Show the first 2 lines of a file

kali@kali:~/Downloads$ head -n 2 example.txt

This is an example text file.

It contains some example text.

- tail: Show the last 3 lines of a file

kali@kali:~/Downloads$ tail -n 3 example.txt

example text.

- wc: Count lines, words, and bytes in a file (printed in that order, followed by the file name)

kali@kali:~/Downloads$ wc example.txt

25 125 754 example.txt

- nl: Print a file with line numbers added (the file itself is not modified)

kali@kali:~/Downloads$ nl file1.txt 1 This is an example text file. 2 It contains some example text. 3 4 5 ...
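If you want to try head, tail, wc, and nl without the author’s files, the sketch below first writes a small hypothetical five-line example.txt into a temporary directory (its contents are made up for the demo) and then runs each command on it.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for example.txt; the author's full file is not shown.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
sample="$workdir/example.txt"
printf '%s\n' \
  'This is an example text file.' \
  'It contains some example text.' \
  'Line three.' \
  'Line four.' \
  'Line five.' > "$sample"

head -n 2 "$sample"   # first two lines
tail -n 3 "$sample"   # last three lines
wc "$sample"          # lines, words, bytes, then the file name
nl "$sample"          # numbered view printed to the terminal; the file is unchanged
```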

Linux Pipes

Linux pipes are powerful tools for connecting commands and processing data efficiently. They allow you to send the output of one command as input to another, creating a chain of operations. This can save you time and effort by eliminating the need to save intermediate results to files.

Let’s see how Linux pipes work.

Execute the first command: The command generates its output as usual.

Use the pipe symbol (|): This symbol separates the first command from the second.

Execute the second command: This command receives the output of the first command as its input.

Let’s understand this with the help of an example.

root@kali:~# ls -l | grep "txt$"

-rw-r--r-- 1 root root 754 Sep 20 20:22 example.txt

ls -l command lists the contents of the current directory with detailed information (long format)

| pipe symbol sends the output of the first command (ls -l) as input to the second command (grep)

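grep “txt$” keeps only the lines that end with “txt” ($ anchors the match to the end of the line). Strictly speaking, this also matches a name like “backup_txt”; the pattern “\.txt$” would require a literal “.txt” extension.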

The command outputs only the entries whose names end in “txt”, together with their detailed information (file type, permissions, owner, size, date, and time). In my case, the only matching file is “example.txt”.
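The difference between `txt$` and `\.txt$` is easy to see in a throwaway directory. This sketch creates a few hypothetical, empty files (the names are invented for the demo) and compares the two patterns; the last pipeline adds a third stage, `wc -l`, to count the matches.

```shell
#!/usr/bin/env bash
# Throwaway directory holding a few hypothetical, empty files.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
touch "$workdir/example.txt" "$workdir/notes.txt" "$workdir/backup_txt" "$workdir/report.pdf"

# "txt$": the name only has to END in t-x-t, so backup_txt matches as well.
loose=$(ls "$workdir" | grep 'txt$')
# "\.txt$": a literal dot is required, i.e. a real .txt extension.
strict=$(ls "$workdir" | grep '\.txt$')
# Three stages: list -> filter -> count.
strict_count=$(ls "$workdir" | grep '\.txt$' | wc -l | tr -d ' ')

printf 'txt$ matches:\n%s\n' "$loose"
printf '\\.txt$ matches:\n%s\n' "$strict"
echo "number of .txt files: $strict_count"
```

Each command only sees the text the previous one printed, which is why the count reflects the filtered list rather than the whole directory.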

Let’s have a look at common commands used with pipes:

grep: Filter text based on a pattern.

The grep command searches text for lines that match a specific pattern. Here are some examples.

I have a file named ‘example.txt’ with the following content:

This is an example text file.

It contains some random sentences.

The quick brown fox jumps over the lazy dog.

This is another sentence with different words.

Now let’s see how we can use the grep command.

- Find lines containing “fox”:

root@kali:~/Downloads$ grep "fox" example.txt

The quick brown fox jumps over the lazy dog.

- Find lines that start with “This”:

root@kali:~/Downloads$ grep "^This" example.txt

This is an example text file.

This is another sentence with different words.

- Find lines that end with “dog”:

root@kali:~/Downloads$ grep "dog$" example.txt

The quick brown fox jumps over the lazy dog.

- Find lines that contain “fox” ignoring case:

root@kali:~/Downloads$ grep -i "fox" example.txt

The quick brown fox jumps over the lazy dog.

- Find lines that contain the exact phrase “fox jumps” as whole words:

root@kali:~/Downloads$ grep -w "fox jumps" example.txt

The quick brown fox jumps over the lazy dog.

- Count the number of lines that contain “fox”:

root@kali:~/Downloads$ grep -c "fox" example.txt

1

- Find lines that do not contain “fox”:

root@kali:~/Downloads$ grep -v "fox" example.txt

This is an example text file.

It contains some random sentences.

This is another sentence with different words.

You can explore other options for the ‘grep’ command by using the ‘man grep’ command. It will show the whole manual of ‘grep’.
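The `-w "fox jumps"` example matches that exact phrase, not every line that merely contains both words. A minimal sketch, using the four example sentences plus one hypothetical extra line, shows how chaining two greps finds lines that contain both words in any order.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
file="$workdir/example.txt"
# The four sentences of example.txt, one per line, plus a hypothetical fifth line
# in which "fox" and "jumps" both appear but not side by side.
printf '%s\n' \
  'This is an example text file.' \
  'It contains some random sentences.' \
  'The quick brown fox jumps over the lazy dog.' \
  'This is another sentence with different words.' \
  'The fox sat still while the cat jumps around.' > "$file"

# Exact phrase "fox jumps" as whole words: only the third line.
phrase_count=$(grep -cw 'fox jumps' "$file")
# Both words anywhere on the line: filter for one, then filter the result for the other.
both_count=$(grep -w 'fox' "$file" | grep -cw 'jumps')
echo "phrase matches: $phrase_count, lines with both words: $both_count"
grep -w 'fox' "$file" | grep -w 'jumps'
```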

sort: Sort text lines lexicographically.

The sort command in Linux is used for organizing and sorting data in text files. It can arrange lines alphabetically, numerically, or based on specific criteria, making it a valuable asset for data analysis and manipulation. Here are some examples.

I have a file named “unsorted_data.txt” in the Downloads folder containing the following lines:

banana

apple

orange

grapefruit

mango

- Sort the lines in ascending order:

root@kali:~/Downloads$ sort unsorted_data.txt

apple

banana

grapefruit

mango

orange

- Sort the lines in descending order:

root@kali:~/Downloads$ sort -r unsorted_data.txt

orange

mango

grapefruit

banana

apple

- Sort the lines, ignoring case:

root@kali:~/Downloads$ sort -f unsorted_data.txt

apple

banana

grapefruit

mango

orange

- Sort the lines numerically (this output assumes the file holds numbers instead of the fruit names shown above):

root@kali:~/Downloads$ sort -n unsorted_data.txt

11

17

20

23

27

- Sort the lines uniquely:

root@kali:~/Downloads$ sort -u unsorted_data.txt

apple

banana

grapefruit

mango

orange

- Combine sort with head to display the first few lines:

root@kali:~/Downloads$ sort unsorted_data.txt | head -n 2

apple

banana

- Combine sort with tail to display the last few lines:

root@kali:~/Downloads$ sort unsorted_data.txt | tail -n 2

mango

orange
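The fruit examples cannot show why `-n` matters. In this sketch (the numbers are arbitrary), plain `sort` compares lines character by character, so `100` lands before `9`, while `sort -n` compares numeric values. `LC_ALL=C` is set only to make the first two results independent of the system locale.

```shell
#!/usr/bin/env bash
# Arbitrary sample numbers, one per line; paste joins the sorted lines with spaces.
plain=$(printf '%s\n' 100 9 23 11 | LC_ALL=C sort | paste -sd ' ' -)        # text order
numeric=$(printf '%s\n' 100 9 23 11 | LC_ALL=C sort -n | paste -sd ' ' -)   # numeric order
top_two=$(printf '%s\n' 100 9 23 11 | sort -rn | head -n 2 | paste -sd ' ' -) # two largest

echo "sort:                 $plain"     # 100 11 23 9
echo "sort -n:              $numeric"   # 9 11 23 100
echo "sort -rn | head -n 2: $top_two"   # 100 23
```

The same difference applies to counts produced by `uniq -c`, which is why numeric sorting is used when ranking them.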

uniq: Remove duplicate lines.

The uniq command removes duplicate lines, but only when the duplicates are adjacent, so its input is normally sorted first. It helps keep your data clean and organized, making it easier to analyze and process. Here are some examples.

I have a file named data.txt containing the following lines:

apple

banana

orange

apple

mango

banana

orange

To remove duplicate lines from this file, sort it first so that identical lines sit next to each other, then pipe the result to uniq:

root@kali:~$ sort data.txt | uniq

apple

banana

mango

orange

Count the number of occurrences of each line:

To count the number of times each line appears in the file, you can use the -c option:

root@kali:~$ sort data.txt | uniq -c

2 apple

2 banana

1 mango

2 orange

Display only duplicate lines:

To only display duplicate lines, you can use the -d option:

root@kali:~$ sort data.txt | uniq -d

apple

banana

orange
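uniq only collapses duplicate lines that are next to each other, which is why sort usually comes before it in a pipeline (as in the challenge commands below). This sketch feeds the same seven fruit lines through uniq with and without sorting, then builds a most-frequent-first table with `sort | uniq -c | sort -rn`.

```shell
#!/usr/bin/env bash
# The same seven lines as data.txt in the uniq examples.
fruits=$(printf '%s\n' apple banana orange apple mango banana orange)

# uniq alone: no two identical lines are adjacent, so nothing is removed.
uniq_only=$(printf '%s\n' "$fruits" | uniq | wc -l | tr -d ' ')
# sort first so identical lines become neighbours, then uniq removes the repeats.
sorted_uniq=$(printf '%s\n' "$fruits" | sort | uniq | wc -l | tr -d ' ')
echo "uniq alone keeps $uniq_only lines; sort | uniq keeps $sorted_uniq"

# Frequency table, most common first (ties are ordered by sort's fallback comparison).
printf '%s\n' "$fruits" | sort | uniq -c | sort -rn
```

`sort -u` merges the sort and uniq steps when you only need the distinct lines and not their counts.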

cut: extract specific columns from text.

The cut command in Linux is a powerful tool for extracting specific parts of text from files or piped data. It allows you to select and display specific fields, characters, or bytes, making it a versatile tool for data manipulation and processing. Here are some examples.

I have a file named “data.txt” in the Downloads folder containing the following comma-separated data:

Name,Age,City

John,30,New York

Jane,25,London

David,40,Tokyo

- Extract the first column (Name):

root@kali:~/Downloads$ cut -d "," -f 1 data.txt

Name

John

Jane

David

- Extract the second column (Age):

root@kali:~/Downloads$ cut -d "," -f 2 data.txt

Age

30

25

40

- Extract the first and third columns (Name and City):

root@kali:~/Downloads$ cut -d "," -f 1,3 data.txt

Name,City

John,New York

Jane,London

David,Tokyo

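- Extract specific characters from a column (first 3 characters of City, skipping the header row). cut accepts only one of -f, -c, or -b at a time, so the field is extracted first and the characters second:

root@kali:~/Downloads$ tail -n +2 data.txt | cut -d "," -f 3 | cut -c 1-3

New

Lon

Tok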
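Since the challenge commands later in this walkthrough split log lines with `cut -d ' '`, one quirk is worth knowing: cut treats every single delimiter character as a field boundary, while awk’s default splitting collapses runs of blanks. The line in this sketch is made up to show the difference.

```shell
#!/usr/bin/env bash
# Hypothetical line with two spaces between "b" and "c".
line='a b  c d'
# cut sees the fields "a", "b", "", "c", "d": the double space creates an empty field 3.
cut_f3=$(printf '%s\n' "$line" | cut -d ' ' -f 3)
# awk's default field splitting treats any run of blanks as one separator, so $3 is "c".
awk_f3=$(printf '%s\n' "$line" | awk '{print $3}')
# A field range: everything from field 2 onward, original spacing preserved.
cut_range=$(printf '%s\n' "$line" | cut -d ' ' -f 2-)
printf 'cut -f 3:  [%s]\nawk $3:    [%s]\ncut -f 2-: [%s]\n' "$cut_f3" "$awk_f3" "$cut_range"
```

If a log file pads its columns with extra spaces, awk is the safer choice; with single-space separators, as the challenge commands assume, cut works fine.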

awk : Perform complex text manipulation and calculations.

Awk allows you to filter, analyze, and manipulate data based on specific patterns. It excels at working with structured data like log files, CSV files, and tabular data. Here are some examples.

I have a file named “data.txt” in my Downloads folder containing the following data:

John Doe,25,Engineer

Jane Doe,30,Teacher

Peter Smith,42,Manager

Alice Brown,22,Student

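- Print the first column (names). The -F',' option sets the field separator to a comma; without it, awk splits on whitespace and $1 would be just “John”:

root@kali:~/Downloads$ awk -F',' '{print $1}' data.txt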

John Doe

Jane Doe

Peter Smith

Alice Brown

- Print specific columns (name and age):

root@kali:~/Downloads$ awk -F',' '{print $1, $2}' data.txt

John Doe 25

Jane Doe 30

Peter Smith 42

Alice Brown 22

- Print only lines where age is greater than 30 (Jane, at exactly 30, is excluded):

root@kali:~/Downloads$ awk -F',' '$2 > 30' data.txt

Peter Smith,42,Manager

- Calculate the average age:

root@kali:~/Downloads$ awk -F',' '{sum += $2} END {print sum / NR}' data.txt

29.75

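- Replace “Engineer” with “Software Engineer” (setting OFS to a comma keeps the commas when awk rebuilds the modified line):

root@kali:~/Downloads$ awk 'BEGIN {FS = OFS = ","} $3 == "Engineer" {$3 = "Software Engineer"} 1' data.txt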

John Doe,25,Software Engineer

Jane Doe,30,Teacher

Peter Smith,42,Manager

Alice Brown,22,Student
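awk can also keep state between lines. This sketch recreates the comma-separated data.txt in a temporary directory, finds the oldest person by tracking a running maximum, and counts people per age decade with an associative array. The decade grouping is an extra illustration, not something from the original task.

```shell
#!/usr/bin/env bash
# Recreate the comma-separated data.txt from the awk examples in a temporary directory.
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
data="$workdir/data.txt"
printf '%s\n' 'John Doe,25,Engineer' 'Jane Doe,30,Teacher' \
  'Peter Smith,42,Manager' 'Alice Brown,22,Student' > "$data"

# Running maximum: NR == 1 seeds max with the first row, later rows replace it if larger.
oldest=$(awk -F',' 'NR == 1 || $2 > max { max = $2; name = $1 } END { print name, max }' "$data")
echo "Oldest: $oldest"   # Oldest: Peter Smith 42

# Associative array keyed by age decade (25 -> 20, 42 -> 40), counted per key.
by_decade=$(awk -F',' '{ count[int($2 / 10) * 10]++ }
  END { for (d in count) print d "s", count[d] }' "$data" | sort -n)
printf '%s\n' "$by_decade"
```

`for (d in count)` visits keys in no guaranteed order, so the result is piped through `sort -n`.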

I think you now have enough knowledge of Linux commands, so let’s move on to the challenges. TryHackMe provides a log file named access.log.

With the help of this file, we need to answer the questions.

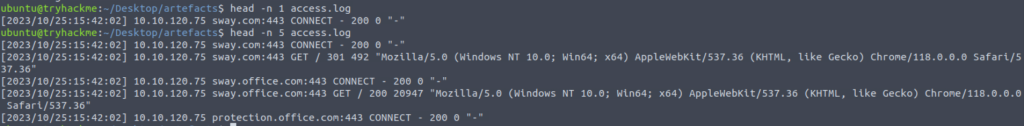

How many unique IP addresses are connected to the proxy server?

First, let’s look at the first few lines of the proxy log to see what each field contains. Use the command:

head -n 5 access.log

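The first command below lists each source IP (field 2) with its request count; the second counts those lines, which gives the number of unique IP addresses.

cut -d ' ' -f2 access.log | sort | uniq -c

cut -d ' ' -f2 access.log | sort | uniq -c | wc -l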

How many unique domains were accessed by all workstations?

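Field 3 holds the requested domain together with its port, so the second cut keeps only the part before the colon.

cut -d ' ' -f3 access.log | cut -d ':' -f1 | sort | uniq -c

cut -d ' ' -f3 access.log | cut -d ':' -f1 | sort | uniq -c | wc -l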
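To see what these pipelines produce without the real access.log, here is a sketch over a few hypothetical proxy log lines. All values are invented; only the field positions (2 = source IP, 3 = domain:port, 5 = URL, 6 = status code) are inferred from the cut commands in this walkthrough, so treat the layout as an assumption.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
log="$workdir/access.log"
# Hypothetical lines. Assumed layout: 1 = timestamp placeholder, 2 = source IP,
# 3 = domain:port, 4 = method, 5 = URL, 6 = status code. Every value is made up.
cat > "$log" <<'EOF'
[ts1] 10.0.0.5 www.example.com:443 CONNECT www.example.com:443 200 0 "-"
[ts2] 10.0.0.7 files.example.org:80 GET http://files.example.org/a 404 0 "-"
[ts3] 10.0.0.5 www.example.com:443 CONNECT www.example.com:443 200 0 "-"
[ts4] 10.0.0.9 www.example.com:443 CONNECT www.example.com:443 200 0 "-"
EOF

# Requests per source IP, then the number of distinct IPs (one line each after uniq -c).
cut -d ' ' -f2 "$log" | sort | uniq -c
unique_ips=$(cut -d ' ' -f2 "$log" | sort | uniq -c | wc -l | tr -d ' ')

# Domains: drop the ":port" suffix before counting.
unique_domains=$(cut -d ' ' -f3 "$log" | cut -d ':' -f1 | sort -u | wc -l | tr -d ' ')
# Least requested domain: ascending counts, first line, second column.
least=$(cut -d ' ' -f3 "$log" | cut -d ':' -f1 | sort | uniq -c | sort -n | head -n 1 | awk '{print $2}')

echo "unique IPs: $unique_ips, unique domains: $unique_domains, least requested: $least"
```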

What status code is generated by the HTTP requests to the least accessed domain?

cut -d ' ' -f3,6 access.log | sort | uniq -c | sort -n | head -n 10

Based on the high count of connection attempts, what is the name of the suspicious domain?

cut -d ' ' -f3,6 access.log | sort | uniq -c | sort -n | tail -n 15

What is the source IP of the workstation that accessed the malicious domain?

cut -d ' ' -f2,3,6 access.log | sort | uniq -c | sort -n | tail -n 5

How many requests were made on the malicious domain in total?

cut -d ' ' -f2,3,6 access.log | sort | uniq -c | sort -n | tail -n 5

Having retrieved the exfiltrated data, what is the hidden flag?

Each step narrows the output further: the lines for the malicious domain, the requested URL (field 5), the value after “=”, and finally the base64-decoded data.

grep "frostlings.bigbadstash.thm" access.log | head -n 5

grep "frostlings.bigbadstash.thm" access.log | cut -d ' ' -f5 | head -n 5

grep "frostlings.bigbadstash.thm" access.log | cut -d ' ' -f5 | cut -d '=' -f2 | head -n 5

grep "frostlings.bigbadstash.thm" access.log | cut -d ' ' -f5 | cut -d '=' -f2 | base64 -d | head -n 5

grep "frostlings.bigbadstash.thm" access.log | cut -d ' ' -f5 | cut -d '=' -f2 | base64 -d | grep "THM"
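The decoding step can be tried on made-up data. This sketch writes hypothetical log lines in which a base64 payload follows `goodies=` in field 5 (the parameter name, IPs, and payloads are all assumptions, and the flag is fake), then extracts and decodes it. It uses `cut -d '=' -f2-` and decodes each chunk separately, a slightly more defensive variant of the commands above in case a chunk ends with `=` padding.

```shell
#!/usr/bin/env bash
workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
log="$workdir/access.log"

# Hypothetical payload chunks; the flag below is fake.
c1=$(printf 'Hello ' | base64)             # 6 bytes -> no "=" padding
c2=$(printf 'THM{example_flag}' | base64)  # 17 bytes -> ends with "=" padding
{
  echo "[ts1] 10.0.0.5 frostlings.bigbadstash.thm:80 GET http://frostlings.bigbadstash.thm/?goodies=$c1 200 0"
  echo "[ts2] 10.0.0.7 www.example.com:443 CONNECT www.example.com:443 200 0"
  echo "[ts3] 10.0.0.5 frostlings.bigbadstash.thm:80 GET http://frostlings.bigbadstash.thm/?goodies=$c2 200 0"
} > "$log"

# grep -F: literal domain match; field 5: the URL; -f2-: everything after the first "=",
# so trailing padding survives; each chunk is then decoded on its own.
decoded=$(grep -F 'frostlings.bigbadstash.thm' "$log" \
  | cut -d ' ' -f5 \
  | cut -d '=' -f2- \
  | while IFS= read -r chunk; do printf '%s' "$chunk" | base64 -d; done)

printf '%s\n' "$decoded"
printf '%s\n' "$decoded" | grep -o 'THM{[^}]*}'
```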

That’s all for this walkthrough.

If you face any issues, feel free to connect with me on LinkedIn and ask your questions. I’ll be happy to help.

Check out our YouTube channel, Ethical Empire.

If you’re preparing for the CEH Practical Exam, don’t forget to check out our playlist, ‘CEH Practical Exam Preparation’.

Until next time, stay curious, stay secure, and keep exploring the fascinating world of cyber security.
